AWS Deep Dives

Brief explanations of AWS services, organized by category for comprehensive understanding.

Security Services

Tools for identity and access management in AWS.

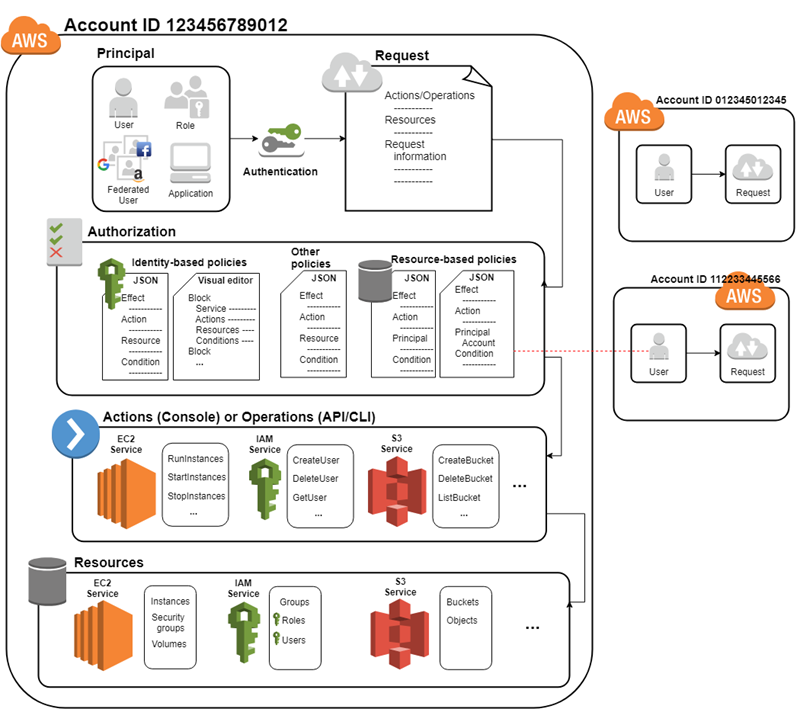

What is IAM?

AWS Identity and Access Management (IAM) is the global service that controls who (users, roles, applications) can perform what actions (e.g., read, write) on which AWS resources (e.g., S3 buckets, EC2 instances). Launched in 2011, it’s free, scalable, and integrates with all AWS services. IAM is the foundation of AWS security—enforcing permissions through policies to protect your account and enabling simple users/groups or complex enterprise federation.

How IAM Works

IAM operates as a centralized control plane—no regions, no VPCs. Every API request (e.g., s3:GetObject) is evaluated in real time:

- Identities: Users (e.g.,

alice), roles (e.g.,ec2-role), or federated identities make requests. - Policies: JSON documents define permissions—attached to identities or resources.

- Evaluation: IAM checks all policies, returning

AlloworDeny.- Default is implicit deny—nothing is allowed unless explicitly permitted.

- Explicit

Denyoverrides anyAllow.

Example: User bob tries s3:GetObject on my-bucket. IAM checks both his policy and the bucket’s policy—grants access only if both align.

Core Components

- Users: Permanent identities for humans or apps.

Credentials: console password, access keys (AKIA...), MFA. - Groups: Collections of users (e.g.,

developers) for shared policies. - Roles: Temporary identities for AWS services (e.g., EC2) or cross-account access. Assumed via STS with a trust policy:

{ "Version": "2012-10-17", "Statement": { "Effect": "Allow", "Principal": {"Service": "ec2.amazonaws.com"}, "Action": "sts:AssumeRole" } } - Policies: JSON permissions—e.g.:

Types: AWS Managed ({ "Version": "2012-10-17", "Statement": { "Effect": "Allow", "Action": "s3:ListBucket", "Resource": "arn:aws:s3:::my-bucket" } }AmazonS3ReadOnlyAccess), Customer Managed (custom), Inline (embedded).

IAM Structure: Controls access—who, what, which AWS resource. Evaluates API requests in real-time against policies.

Key Features

- Multi-Factor Authentication (MFA): Enhances user/root account security by requiring a second factor (e.g., app code, YubiKey).

- Identity Federation: Connects external identities to IAM roles for SSO:

- SAML 2.0: Enterprise (e.g., Active Directory). Upload metadata:

Users sign in ataws iam create-saml-provider --saml-metadata-document file://adfs-metadata.xmlhttps://signin.aws.amazon.com/saml. - OIDC: Web apps (e.g., Google, GitHub). Configure:

aws iam create-open-id-connect-provider --url https://accounts.google.com --client-id-list "123.apps.googleusercontent.com" - Use Case: Developer logs into Google → assumes an IAM role → accesses AWS console without an IAM user.

- SAML 2.0: Enterprise (e.g., Active Directory). Upload metadata:

- Attribute-Based Access Control (ABAC): Tag-driven permissions. Example:

User{ "Effect": "Allow", "Action": "s3:*", "Resource": "*", "Condition": { "StringEquals": { "s3:ResourceTag/env": "dev", "aws:PrincipalTag/team": "devs" } } }alice(tagteam=devs) accesses S3 buckets taggedenv=dev. - Cross-Account Access: Role in Account A trusts Account B:

Bob assumes it:{ "Principal": {"AWS": "arn:aws:iam::987654321098:user/bob"} }aws sts assume-role --role-arn arn:aws:iam::123456789012:role/audit-role

Practical Examples

-

Secure an S3 Bucket: Create IAM user

alice:

Attach policy:aws iam create-user --user-name aliceaws iam attach-user-policy --user-name alice --policy-arn arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess -

EC2 Access to S3: Create role

ec2-s3:

Attachaws iam create-role --role-name ec2-s3 --assume-role-policy-document file://ec2-trust.jsonAmazonS3FullAccess, link to EC2 instance.

Additional Concepts & Best Practices

- Groups cannot contain other groups: IAM groups are collections of users only; you cannot nest groups within other groups.

- Policy Sid (Statement ID):

Sidis an optional field in policy statements for uniquely labeling each statement for easier management and auditing. - Principle of Least Privilege: Always grant only the minimum permissions required for users, groups, or roles to reduce security risk.

- Resource-Based Policies: Some AWS resources such as S3, SNS, and SQS support policies directly attached to the resource, controlling access separately from identity policies.

- IAM Permission Boundaries: Set boundaries at the user or role level to define the maximum permissions they can be granted, acting as a safeguard on top of regular policy attachments.

- Policy Evaluation Logic: IAM determines access by checking Explicit Deny, Organization Service Control Policies (SCPs, if AWS Organizations is used), Resource-based Policies, Permission Boundaries, and finally Identity-based Policies.

- Certificates with IAM: If you get SSL/TLS certificates from a third-party provider, you can import them into AWS Certificate Manager (ACM) or upload them to the IAM Certificate Store for use with specific AWS services.

Integration with AWS Identity Services

- AWS Cognito: Provides user directory (User Pools) for sign-up/sign-in and identity federation (Identity Pools) for temporary AWS credentials. Enables authentication for web, mobile, and federated users using social or enterprise IdPs. Recommended by AWS for managing application users.

- AWS Directory Service:

- Managed Microsoft AD: Fully managed Active Directory supporting integration with on-premises AD for enterprise-scale workloads and authentication.

- AD Connector: Proxy service to connect AWS resources to your on-premises Microsoft AD without storing data in the cloud.

- Simple AD: Basic standalone directory for simple use cases; not connectable to on-prem AD.

Limits and Pricing

Limits: Users: 5,000; Roles: 1,000; Groups: 300; Policies/user: 10; Policy size: 6,144 chars—soft limits, request increases.

Pricing: Core IAM: free. MFA: $12.99 (virtual), $20-$50 (hardware). Federation/STS: free (external IdP costs vary).

Compute Services

Scalable compute resources for running applications and workloads in AWS.

Overview

Amazon Elastic Compute Cloud (EC2) is AWS’s flagship compute service, offering resizable virtual servers in the cloud since 2006. It’s the backbone for running applications, hosting workloads, and scaling compute capacity without the overhead of physical hardware. EC2 provides granular control over CPU, memory, storage, and networking, making it a versatile choice for everything from web servers to machine learning clusters. Unlike serverless options like Lambda, EC2 requires you to manage the OS, patching, and scaling—think of it as renting a customizable computer in AWS’s data centers, billed by the second.

Architecture and Core Components

EC2 instances run on AWS’s global infrastructure, leveraging Xen or Nitro hypervisors (depending on instance type) across Availability Zones (AZs). Instances are launched from Amazon Machine Images (AMIs)—preconfigured templates with OS and software (e.g., Amazon Linux 2, Ubuntu 20.04). The Nitro System, introduced in 2017, offloads networking, storage, and security to dedicated hardware, boosting performance and isolation. Key components include:

- Instances: Virtual machines with defined resources (e.g., t3.micro: 2 vCPUs, 1 GB RAM). Launched in a VPC subnet with an Elastic Network Interface (ENI).

- AMIs: Stored in S3, AMIs are regional but shareable across accounts—create custom AMIs by snapshotting EBS volumes.

- Instance Metadata: Accessible at

http://169.254.169.254/latest/meta-data/—provides instance ID, IP, etc., for automation.

Instance Types and Families

EC2 offers a dizzying array of instance types, grouped into families optimized for specific workloads. Each type balances vCPU, memory, network, and storage:

- General Purpose (T, M): T3 (burstable, credits for CPU spikes, $0.0104/hr t3.micro), M5 (balanced, 25 Gbps networking)—e.g., web apps, small DBs.

- Compute Optimized (C): C5 (high CPU, 3.0 GHz Intel Xeon, up to 100 Gbps)—e.g., gaming servers, HPC.

- Memory Optimized (R, X): R5 (high RAM, 96 vCPUs, 768 GB)—e.g., in-memory DBs like Redis.

- Storage Optimized (I, D): I3 (NVMe SSDs, 15 TB local)—e.g., NoSQL DBs, data warehouses.

- GPU (G, P): G4 (NVIDIA T4, 16 GB GPU RAM)—e.g., ML training, video rendering.

Choosing the right type is an art—over-provisioning wastes money, under-provisioning kills performance. Use CloudWatch metrics (CPU, memory via agent) to right-size.

Storage Options

EC2 instances pair with storage for persistence and speed:

- EBS (Elastic Block Store): Network-attached SSD/HDD volumes (e.g., gp3: 3,000 IOPS base, $0.08/GB). Snapshots in S3 enable backups and AMI creation. Multi-Attach (io2) allows clustering.

- Instance Store: Ephemeral, local SSDs (e.g., 7.5 TB on i3.large)—high IOPS (up to 3.3M), lost on stop/termination. Use for temp data or caches.

- EFS/S3: Mountable file systems or object storage via ENI—EFS for shared files, S3 for off-instance data.

EBS is detachable—stop an instance, swap volumes, or resize (e.g., gp2 to gp3) without downtime.

Pricing and Purchase Options

EC2’s pricing is complex but flexible:

- On-Demand: Pay-per-second ($0.0104/hr t3.micro to $3+/hr GPU)—no commitment, ideal for testing.

- Reserved Instances (RI): 1-3 year contracts, ~40-70% off (e.g., t3.medium 3-yr All Upfront: ~$0.015/hr)—predictable workloads.

- Spot Instances: Bid on spare capacity, up to 90% off (e.g., c5.large ~$0.03/hr)—interruptible, use for batch jobs with Spot Fleets.

- Savings Plans: Commit to compute spend ($1/hr), applies across EC2/Lambda/Fargate—more flexible than RIs.

Free tier: 750 hrs/month of t2/t3.micro (1 yr)—great for learning. Data transfer out: $0.09/GB after 100 GB free.

Networking and Scaling

EC2 lives in a VPC—public/private subnets dictate access. ENIs provide IPs (private + optional Elastic IP); enhanced networking (ENA, up to 100 Gbps) boosts throughput. Scaling comes via:

- Auto Scaling Groups (ASG): Launch/terminate instances based on CloudWatch metrics (CPU > 70%)—spans AZs for HA.

- Elastic Load Balancer (ELB): ALB routes HTTP to EC2—e.g., path-based routing (

/apivs./web).

Example: A web app scales from 2 to 10 t3.medium instances across 2 AZs, balanced by ALB.

Use Cases and Scenarios

EC2’s versatility shines:

- Web Hosting: Nginx on t3.medium, EBS for persistence—scale with ASG.

- Batch Processing: Spot Instances crunch data (e.g., video encoding)—checkpoint to S3.

- ML Training: P3 instances with GPUs—EBS for datasets, S3 for outputs.

Edge Cases

Instance Limits: 20 On-Demand per region (soft)—request increases. Spot Interruptions: 2-minute warning—save state to EBS/S3. EBS Bottlenecks: High IOPS needs io2 (16,000 IOPS base)—costly.

Overview

AWS Lambda, introduced in 2014, is a serverless compute service that runs code in response to events without provisioning or managing servers. It’s a paradigm shift from EC2—AWS handles scaling, patching, and infrastructure, while you focus on code (functions). Lambda executes in ephemeral containers, billed by invocation and duration (ms), making it ideal for event-driven, short-lived tasks. From resizing S3 images to processing IoT streams, Lambda’s stateless nature and auto-scaling make it a cornerstone of modern architectures.

Architecture and Execution

Lambda’s backend is a black box—AWS spins up containers (Firecracker microVMs) on demand, running your code in isolated environments. Key elements:

- Functions: Code + config (e.g., Python 3.9, 512 MB RAM)—uploaded as ZIP or container images (up to 10 GB).

- Execution Environment: Includes runtime, libraries, and /tmp (512 MB)—stateless, but VPC adds ENIs.

- Triggers: S3, API Gateway, CloudWatch Events—events invoke functions asynchronously or synchronously.

Cold starts (initial container spin-up) add latency (ms to seconds)—minimized with Provisioned Concurrency or lightweight runtimes (e.g., Node.js vs. Java).

Limits and Configuration

Lambda has strict boundaries:

- Timeout: 15 minutes max—long tasks need EC2 or Step Functions.

- Memory: 128 MB to 10 GB—CPU scales proportionally (e.g., 1,769 vCPUs at 10 GB).

- Concurrency: 1,000 per region (soft)—bursts higher, throttles excess (use Reserved Concurrency).

Layers extend functions—e.g., share NumPy across functions. Environment variables configure dynamically (e.g., API keys).

Pricing

Pay-per-use: $0.20/1M requests, $0.0000167/GB-second. Free tier: 1M requests, 400,000 GB-seconds/month. Example: 1M 1-second runs at 1 GB = $16.67—cheaper than EC2 for sporadic tasks.

Use Cases

Event Processing: S3 upload triggers image resize. API Backends: API Gateway + Lambda for REST endpoints. Cron Jobs: CloudWatch schedules nightly tasks—e.g., DB cleanup.

Edge Cases

Cold Starts: Java + VPC = 10s delay—use Node.js or Provisioned Concurrency. Throttling: 1,000 limit blocks bursts—queue with SQS.

Overview

AWS Fargate, launched in 2017, is a serverless compute engine for containers, eliminating the need to manage EC2 instances while running Dockerized workloads. Built atop ECS (Elastic Container Service) and later extended to EKS (Elastic Kubernetes Service), Fargate abstracts the underlying infrastructure—define your container’s CPU and memory, and AWS handles provisioning, scaling, and patching. It’s a middle ground between EC2’s control and Lambda’s simplicity, ideal for microservices, batch jobs, or stateless apps needing more runtime flexibility than Lambda’s 15-minute limit.

Architecture and Core Components

Fargate runs containers in a managed cluster—AWS provisions virtualized compute resources behind the scenes, likely using Firecracker microVMs (similar to Lambda). Unlike EC2-based ECS, where you manage instances, Fargate tasks launch directly into a VPC with dedicated ENIs (Elastic Network Interfaces) for networking. Key components include:

- Tasks: The running container instance—defined by a Task Definition (JSON) specifying image (e.g.,

nginx:latest), CPU (256-16,384 units), memory (0.5-120 GB), and ports. - Services: Maintain a desired task count—e.g., 3 Nginx containers—with auto-scaling and load balancing via ALB.

- Cluster: A logical grouping of tasks—Fargate clusters don’t expose EC2, unlike ECS EC2 mode.

Tasks are isolated—each gets its own ENI in your VPC subnet, ensuring network security and private IPs. AWS handles OS updates, container orchestration, and resource allocation transparently.

Configuration and Limits

Fargate offers fine-grained resource allocation—CPU in 256-unit increments (1 vCPU = 1,024 units), memory in GB (e.g., 2 vCPUs + 4 GB). Limits include:

- Task Size: 256 CPU units (0.25 vCPU) to 16,384 (16 vCPUs), 512 MB to 120 GB RAM—combinable in specific ratios (e.g., 4 vCPUs needs 8-32 GB).

- Storage: 20-200 GB ephemeral per task (no EBS/Instance Store)—use EFS for persistence.

- Concurrency: 100 tasks per service default—scales with region limits (request increases).

Task Definitions support multiple containers (e.g., app + sidecar), logs route to CloudWatch, and IAM roles grant service access (e.g., S3).

Pricing

Fargate bills per-second for vCPU and GB-hour: $0.04048/vCPU-hour, $0.004445/GB-hour (us-east-1). Example: 1 vCPU + 2 GB for 1 hour = $0.04937—pricier than EC2 but no management overhead. Free tier: 400,000 GB-seconds/month shared with Lambda. Data transfer out: $0.09/GB after 100 GB free.

Networking and Scaling

Fargate integrates with VPC—tasks get private IPs (public via NAT/IGW). Use awsvpc mode—each task has an ENI, supporting Security Groups (e.g., port 80 inbound). Scaling happens via:

- ECS Services: Auto-scaling based on CloudWatch metrics (e.g., CPU > 70%)—adjusts task count.

- ALB/NLB: Load balances traffic—e.g., ALB routes

/apito Fargate tasks.

Example: Run 5 Node.js containers behind ALB, scaling to 10 on demand—zero server management.

Use Cases and Scenarios

Fargate shines where serverless meets containers:

- Microservices: Deploy 10 REST API containers—each 0.5 vCPU, 1 GB—scaled via ECS Service.

- Batch Jobs: Run data processing tasks—e.g., ETL pipeline with 4 vCPUs, EFS for input/output.

- CI/CD: Jenkins workers on Fargate—spin up on demand, shut down when idle.

Edge Cases and Gotchas

No Instance Access: Can’t SSH—debug via logs or exec (ECS). Ephemeral Storage: 200 GB max—EFS for more, but adds cost. Cold Starts: Slower than Lambda (seconds)—pre-warm with min task count. Pricing: Overkill for steady-state workloads—EC2 Spot cheaper.

Integration with Other Services

ECS/EKS: Fargate powers tasks—ECS for simplicity, EKS for Kubernetes. CloudWatch: Logs and metrics—e.g., CPU utilization. EFS: Persistent storage—e.g., shared configs. ALB: HTTP routing—e.g., path-based microservices.

Overview

Amazon Elastic Container Service (ECS), launched in 2014, is a fully managed container orchestration service that simplifies running Docker containers at scale. It’s AWS’s homegrown alternative to Kubernetes (EKS), offering tight integration with EC2 or Fargate for compute, and supporting microservices, batch jobs, and CI/CD pipelines. Unlike Lambda’s serverless simplicity or EC2’s raw control, ECS abstracts container management—define tasks, services, and clusters, and AWS handles scheduling, scaling, and health. It’s versatile, cost-effective, and a staple for containerized workloads.

Architecture and Core Components

ECS operates as a regional service, orchestrating containers across a cluster—either EC2 instances you manage or Fargate’s serverless compute. It uses a control plane (AWS-managed) and data plane (your compute). Key components:

- Clusters: Logical grouping of tasks/services—e.g.,

my-cluster—spans VPC subnets. - Task Definitions: JSON blueprints—e.g.,

nginx:latest, 0.5 vCPU, 1 GB RAM—define containers, ports, volumes. - Tasks: Running instances of Task Definitions—e.g., one-off job or long-running app.

- Services: Maintain task count—e.g., 3 Nginx tasks—with load balancing and auto-scaling.

- Container Agent: Runs on EC2—e.g.,

/ecs-agent—communicates with ECS control plane.

EC2 mode requires instance management (AMIs, patching); Fargate mode abstracts it—tasks get ENIs in your VPC. Scheduling uses capacity providers—e.g., Fargate vs. EC2 Spot.

Launch Types and Configuration

ECS supports two launch types:

- EC2: You manage instances—e.g., t3.medium cluster, 20 tasks max—full control, cheaper.

- Fargate: Serverless—e.g., 0.5 vCPU, 2 GB per task—256-16,384 CPU units, 0.5-120 GB RAM.

Config includes networking (awsvpc, bridge), IAM roles (task execution, task role), and logging (CloudWatch). Limits: 10,000 tasks/cluster, 120 tasks/service—soft limits, request increases.

Pricing

No direct ECS cost—pay for compute: EC2 (e.g., $0.0104/hr t3.micro), Fargate ($0.04048/vCPU-hr, $0.004445/GB-hr). Free tier: 400,000 GB-seconds/month (Fargate). Example: 3 tasks, 1 vCPU, 2 GB, 24 hrs = $3.55/day (Fargate)—EC2 cheaper with RIs.

Networking and Scaling

ECS integrates with VPC—awsvpc gives tasks ENIs (Security Groups, private IPs). Scaling via:

- Services: Desired count—e.g., 5 tasks—auto-scales with CloudWatch (CPU > 70%).

- ALB/NLB: Routes traffic—e.g., ALB path

/apito ECS service. - Capacity Providers: Mix EC2/Fargate—e.g., 80% Fargate, 20% Spot.

Example: 10-task service behind ALB scales to 20 on demand—Fargate handles provisioning.

Use Cases and Scenarios

Microservices: 5 APIs—e.g., user-service, 2 tasks each, ALB routing. Batch Jobs: ETL—e.g., 50 Fargate tasks process S3 data. CI/CD: Jenkins—e.g., EC2 cluster runs build containers.

Edge Cases and Gotchas

EC2 Overhead: Patching, scaling manual—use ASG. Fargate Cold Starts: Seconds—pre-warm with min tasks. Task Limits: 10,000/cluster—split large apps. Networking: awsvpc ENI limits—plan subnet IPs.

Integration with Other Services

Fargate: Serverless tasks—e.g., 1 vCPU jobs. ALB: HTTP routing—e.g., /users. CloudWatch: Logs/metrics—e.g., CPU alarms. EFS: Shared storage—e.g., /mnt/efs. IAM: Task roles—e.g., S3 access.

Overview

Elastic Load Balancer (ELB), introduced in 2009, is AWS’s managed load balancing service, distributing traffic across compute targets (EC2, Fargate, Lambda, etc.) to ensure availability, scalability, and fault tolerance. It offers four variants: Application Load Balancer (ALB) for Layer 7 (HTTP/HTTPS), Network Load Balancer (NLB) for Layer 4 (TCP/UDP), Gateway Load Balancer (GLB) for Layer 3 (IP routing), and Classic Load Balancer (CLB) for legacy apps. Fully managed and auto-scaling, ELB integrates with VPCs and spans AZs, offloading traffic management from your compute resources.

Architecture and Core Components

ELB runs in AWS’s edge and regional network, a distributed system (likely reverse proxies or routers) with no single point of failure. Common components across types:

- Load Balancer: Entry point—e.g.,

my-elb-123.us-east-1.elb.amazonaws.com—lives in a VPC. - Listeners: Protocols/ports—e.g., HTTP:80, TCP:443—route to targets.

- Target Groups: Compute endpoints—e.g., EC2, IPs—with health checks (except GLB).

Deployed in subnets—public (IGW) or private (NAT). Cross-zone balancing spreads traffic across AZs—optional for cost control.

ELB Variants

Each ELB type serves distinct needs:

- Application Load Balancer (ALB, 2016): Layer 7—HTTP/HTTPS routing via path (

/api), host (api.example.com), headers. Supports WebSockets, Lambda targets. Ideal for microservices, web apps. - Network Load Balancer (NLB, 2017): Layer 4—TCP/UDP, ultra-low latency (100s of microseconds), millions of requests/sec. Static IPs, preserves source IP. Suits high-throughput, real-time apps.

- Gateway Load Balancer (GLB, 2020): Layer 3—IP traffic routing to third-party appliances (e.g., firewalls, IDS). Transparent, uses GENEVE protocol. For network security/inspection.

- Classic Load Balancer (CLB, 2009): Legacy—Layer 4 (TCP) or 7 (HTTP). Basic balancing, no advanced routing. Deprecated—use ALB/NLB for new apps.

Features and Configuration

ALB: Rules (100/listener)—e.g., /users to ECS, sticky sessions (AWSALB cookie), SSL via ACM. NLB: Static IPs per AZ, TLS termination—e.g., TCP:443 to EC2. GLB: Appliance targets—e.g., Palo Alto VM, no health checks (endpoint-managed). CLB: Basic HTTP/TCP—e.g., port 80 to EC2. Limits: ALB 1,000 targets/group, NLB 200, CLB 100—soft limits.

Health Checks: ALB/CLB—HTTP 200 on /health; NLB—TCP ping; GLB—none. SSL: ALB/NLB/CLB—ACM or custom certs—e.g., TLS 1.3.

Pricing

Varies by type—pay-per-hour + capacity:

- ALB: $0.0225/hr + $0.008/LCU-hr (connections, bytes, rules)—e.g., 10 LCUs, 24 hrs = $0.78/day.

- NLB: $0.0225/hr + $0.006/NCU-hr (connections, bandwidth)—e.g., 5 NCUs = $0.54/day.

- GLB: $0.025/hr + $0.007/GCU-hr (traffic)—e.g., 5 GCUs = $0.58/day.

- CLB: $0.025/hr + $0.008/GB processed—e.g., 10 GB = $0.68/day.

Free tier: 750 hrs/month (shared). Data transfer: $0.09/GB out.

Networking and Scaling

VPC-integrated—public/private subnets. Scaling is automatic—e.g., ALB handles 10M requests/sec. Targets:

- ALB: Instance, IP, Lambda—e.g.,

i-12345678,/apito Fargate. - NLB: Instance, IP—e.g.,

10.0.1.5, TCP:3306 to RDS proxy. - GLB: IP—e.g.,

192.168.1.10to firewall appliance. - CLB: Instance only—e.g.,

i-12345678.

Example: ALB routes /web to 5 EC2, NLB sends TCP:443 to 10 Fargate—scales with load.

Use Cases and Scenarios

ALB: Microservices—e.g., /auth to ECS, HTTPS web apps. NLB: Real-time—e.g., gaming UDP to EC2, RDS proxy. GLB: Security—e.g., route VPC traffic via NGFW. CLB: Legacy—e.g., HTTP to old EC2 cluster.

Edge Cases and Gotchas

ALB: 100-rule limit—complex apps need multiple ALBs. NLB: Static IP cost—e.g., Elastic IP fees if detached. GLB: Appliance health—manual failover, no checks. CLB: Deprecated—lacks WebSockets, slow updates. Cross-Zone: Data cost—e.g., $0.01/GB AZ-to-AZ—disable if local. Drain: ALB/NLB—300s delay—tune for slow clients.

Integration with Other Services

EC2/ASG: ALB/NLB/CLB targets—e.g., scale 2-10 instances. ECS/Fargate: ALB/NLB—e.g., /api to service. Lambda: ALB—e.g., serverless proxy. CloudWatch: Metrics—e.g., ActiveConnectionCount, 5xx alarms. ACM: SSL—e.g., *.example.com. WAF: ALB—e.g., block XSS. VPC: GLB—e.g., route via appliances.

Overview

Auto Scaling Groups (ASG), part of AWS Auto Scaling since 2009, dynamically adjust the number of EC2 instances in a group based on demand, ensuring availability and cost efficiency. Unlike ECS services or Lambda’s auto-scaling, ASG gives you fine-grained control over instance provisioning—ideal for stateful apps, web servers, or batch processing. It pairs with ELB for load distribution and CloudWatch for triggers, making it a compute workhorse for elastic workloads.

Architecture and Core Components

ASG operates regionally, managing EC2 instances across AZs in a VPC. It’s a control layer atop EC2—no standalone compute. Key components:

- Launch Template/Configuration: Defines instance—e.g., t3.medium, AMI, EBS—replaces older Launch Configs.

- Group: Set of instances—e.g., 2-10 t3.micro—min, max, desired capacity.

- Scaling Policies: Rules—e.g., CPU > 70% adds 2 instances—simple, step, or target tracking.

Instances launch in subnets—e.g., 1 per AZ—health monitored via ELB or EC2 status. Termination respects oldest/newest or custom logic.

Features and Configuration

Policies: Target tracking (e.g., 50% CPU), step scaling (e.g., +2 at 80%), scheduled (e.g., 10 instances at 9 AM). Cooldown: Delay—e.g., 300s—prevents thrashing. Mixed Instances: Multiple types—e.g., t3 + c5, Spot + On-Demand. Limits: 20 instances default—soft limit.

Pricing

Free—pay for EC2: $0.0104/hr t3.micro (On-Demand), Spot ~$0.003/hr. Example: 5 t3.micro, 24 hrs = $1.25/day (On-Demand)—Spot slashes costs.

Networking and Scaling

ASG ties to VPC—subnets define AZ spread. Scaling triggers via:

- CloudWatch: Metrics—e.g.,

CPUUtilization,RequestCountPerTarget. - ELB: Health-based—e.g., replace unhealthy instances.

- Manual: Set desired—e.g., 8 instances now.

Example: Web app scales 2-10 instances across 2 AZs, ALB balances—CPU > 70% adds 2.

Use Cases and Scenarios

Web Hosting: Nginx cluster—e.g., 3-15 instances, ALB front. Batch Processing: Spot instances—e.g., 50 crunch data overnight. HA: Multi-AZ—e.g., min 2 per AZ.

Edge Cases and Gotchas

Cooldown: Slow response—e.g., 300s delays scaling. Spot Termination: 2-min warning—checkpoint often. AZ Imbalance: Subnet size limits—e.g., /28 caps at 14 IPs. Health Checks: ELB lag—use EC2 status for speed.

Integration with Other Services

EC2: Instance pool—e.g., t3.micro. ALB/NLB: Traffic spread—e.g., /web. CloudWatch: Triggers—e.g., CPU alarms. EBS: Persistent volumes—e.g., attach on launch. IAM: Instance roles—e.g., S3 access.

Overview

AWS Batch, launched in 2016, is a managed service for running batch computing workloads at scale, automating job scheduling and resource provisioning. Built on ECS, it’s tailored for data processing, simulations, or ETL—think “HPC lite” without cluster management. Unlike ECS’s general-purpose orchestration, Batch focuses on queue-based, finite jobs, using EC2 or Fargate under the hood, and optimizing cost with Spot Instances.

Architecture and Core Components

Batch is a regional service, orchestrating jobs via ECS clusters (EC2 or Fargate). It’s a scheduler atop compute resources. Key components:

- Jobs: Units of work—e.g., Python script in Docker—defined by Job Definitions.

- Job Definitions: Templates—e.g.,

my-job-def, 2 vCPUs, 4 GB,my-image:1.0. - Job Queues: Prioritized queues—e.g.,

high-priority—map to compute environments. - Compute Environments: Resource pools—e.g., EC2 Spot, Fargate—managed or unmanaged.

Jobs submit to queues, Batch schedules to environments—e.g., 100 jobs on 10 EC2 instances—retries failed tasks.

Features and Configuration

Priority: Queues ranked—e.g., 1 (high) vs. 10 (low). Retry: Configurable—e.g., 3 attempts on failure. Dependencies: Job B after A—e.g., ETL pipeline. Limits: 10,000 jobs/queue, 50 queues—soft limits.

Pricing

Free—pay for compute: EC2 ($0.0104/hr t3.micro), Fargate ($0.04048/vCPU-hr). Example: 10 jobs, 1 vCPU, 2 GB, 1 hr = $0.49 (Fargate)—Spot cuts to ~$0.15.

Networking and Scaling

VPC-based—jobs get ENIs (awsvpc). Scaling via:

- Compute Environment: Min/max vCPUs—e.g., 0-100, Spot 70%.

- Queue: Multi-queue priority—e.g.,

urgentgets first resources.

Example: 50 ETL jobs on 20 Spot instances—scales up/down dynamically.

Use Cases and Scenarios

ETL: Process 1 TB S3 data—e.g., 100 jobs, 2 vCPUs each. Simulations: Monte Carlo—e.g., 1,000 Spot tasks. Rendering: Video frames—e.g., 50 Fargate jobs.

Edge Cases and Gotchas

Spot Interruptions: 2-min warning—checkpoint to S3. Queue Backlog: Low priority starves—adjust ratios. Fargate Limits: 16 vCPUs max/task—split big jobs. Startup Lag: EC2 provisioning—pre-warm with min vCPUs.

Integration with Other Services

ECS: Runs tasks—e.g., Fargate jobs. S3: Input/output—e.g., s3://data. CloudWatch: Logs/metrics—e.g., job failures. IAM: Job roles—e.g., DynamoDB access. Step Functions: Orchestrate—e.g., multi-step batch.

Overview

AWS Elastic Beanstalk, launched in 2011, is a Platform-as-a-Service (PaaS) for deploying and managing applications without wrestling with infrastructure. It abstracts EC2, ASG, ELB, and more—upload code (e.g., Java, Python, Node.js), and Beanstalk handles provisioning, scaling, and monitoring. It’s less flexible than ECS or EC2 but faster for devs wanting “just deploy”—think Heroku on AWS, ideal for web apps or APIs.

Architecture and Core Components

Beanstalk is a regional service, orchestrating AWS resources under the hood. Key components:

- Application: Top-level—e.g.,

my-app—holds versions and environments. - Environment: Running instance—e.g.,

prod—EC2, ELB, ASG bundle. - Application Version: Code bundle—e.g.,

v1.0.zip—stored in S3. - Platform: Prebuilt stack—e.g.,

Python 3.9 on Amazon Linux 2.

Deploys to EC2 (single-instance or load-balanced)—e.g., t3.micro cluster in VPC. Managed updates patch OS/apps.

Features and Configuration

Platforms: Java, .NET, Node.js, etc.—e.g., Dockerrun.aws.json for Docker. Env Vars: Config—e.g., DB_HOST. Scaling: ASG rules—e.g., 1-4 instances, CPU > 70%. Limits: 10 apps, 75 versions—soft limits.

Pricing

Free—pay for resources: EC2 ($0.0104/hr t3.micro), ALB ($0.0225/hr), S3 ($0.023/GB). Example: 2 t3.micro, ALB, 24 hrs = $0.76/day. Free tier: 750 hrs/month EC2.

Networking and Scaling

VPC-based—public/private subnets. Scaling via:

- ASG: Auto-scales—e.g., 2-10 instances.

- ALB: Load balances—e.g.,

my-app.elasticbeanstalk.com.

Example: Node.js app scales 1-5 instances, ALB routes—zero config.

Use Cases and Scenarios

Web Apps: Flask API—e.g., app.zip to prod. Prototypes: Quick deploy—e.g., PHP site in 5 mins. Legacy: .NET migration—e.g., IIS on EC2.

Edge Cases and Gotchas

Limited Control: No raw EC2 access—use ECS for flexibility. Updates: Managed patches break customizations—test in dev. Scaling Lag: ASG cooldown—e.g., 300s. Costs: ALB adds $16/month—watch usage.

Integration with Other Services

EC2/ASG: Compute/scaling—e.g., t3.micro cluster. ALB: Traffic—e.g., HTTPS. S3: Code storage—e.g., v1.0.zip. CloudWatch: Logs/metrics—e.g., 5xx alarms. RDS: DB—e.g., MySQL env.

Monitoring and Management Services

Tools for observing, auditing, and managing AWS resources and workloads.

Overview

Amazon CloudWatch, launched in 2009, is AWS’s observability service, collecting, storing, and analyzing metrics, logs, and events from compute resources and beyond. It’s the pulse of your AWS environment, providing real-time insights into performance (via metrics), diagnostics (via logs), and automation (via alarms and events). While it integrates tightly with compute services like EC2, Lambda, and Fargate, its scope spans storage, databases, networking, and even custom apps—making it a central hub for monitoring and managing your cloud infrastructure. CloudWatch isn’t about running workloads but understanding them deeply, from system health to application behavior.

Architecture and Core Components

CloudWatch operates as a distributed, regional service, ingesting data from over 70 AWS services, custom applications, and on-premises systems via APIs or agents. Data is processed, stored, and made queryable, with outputs driving dashboards, alarms, or event-driven actions. Its architecture is serverless—AWS manages the backend, likely a mix of time-series databases (for metrics) and log aggregation systems. Key components include:

- Metrics: Time-series data points—e.g., EC2

CPUUtilization, LambdaInvocations—stored for 15 months with granularity from 1 second to 1 month. - Logs: Unstructured or semi-structured text—e.g., Lambda stdout, Apache logs—organized into Log Groups (e.g.,

/aws/lambda/myFunction) and Streams (per instance/shard). - Events: Real-time triggers—e.g., EC2 state change, S3 upload—routed via Event Rules to targets like Lambda or SNS.

- Alarms: Metric-based thresholds—e.g.,

CPUUtilization > 80% for 5 minutes—triggering SNS notifications or Auto Scaling.

Data flows in via integrations (e.g., Lambda auto-logs), the CloudWatch Agent (for EC2 memory/disk), or SDKs (custom metrics)—stored regionally with no cross-region aggregation unless you build it.

Features and Capabilities

CloudWatch’s versatility comes from its rich feature set, designed to monitor, troubleshoot, and automate:

- Metrics: Predefined from AWS (e.g., S3

BucketSizeBytes) or custom (e.g.,AppLatencyviaPutMetricData)—supports namespaces, dimensions (e.g., per-instance), and stats (avg, max). - Logs Insights: SQL-like queries on logs—e.g.,

fields @timestamp, @message | filter @message like /error/ | sort @timestamp desc—powered by a Presto-based engine for fast analysis. - Dashboards: Custom visualizations—e.g., graph EC2 CPU, Lambda errors, and S3 requests side-by-side—shareable across teams.

- Synthetics: Canary scripts (Node.js/Python) monitor endpoints—e.g., ping

/healthevery 5 minutes, alert on 500s—simulating user behavior. - Events and EventBridge: Rules match patterns (e.g.,

{"source": "aws.ec2"})—trigger Lambda, step functions, or SNS; EventBridge extends with custom buses. - X-Ray Integration: Links traces to metrics—e.g., Lambda latency tied to invocation count—for end-to-end debugging.

Retention: Metrics free for 15 months (1-second data downsampled after 3 hours); logs stored indefinitely (set expiration) or exported to S3 for archival—e.g., 90 days active, then Glacier.

Pricing

CloudWatch’s pricing is pay-as-you-go, tiered by feature:

- Metrics: Free for basic AWS metrics (e.g., EC2 CPU), $0.30/month per custom metric, $0.01/1,000 requests for high-res (1-second).

- Logs: $0.50/GB ingested, $0.03/GB-month stored—free tier 5 GB/month ingest+storage. Insights: $0.005/GB scanned.

- Alarms: $0.10/month (standard, 1-minute), $3/month (high-res, 1-second).

- Dashboards: $3/month per dashboard—first free.

- Synthetics: $0.001/run (10-second interval)—e.g., 5-minute canary = $0.288/day.

- Events: $1/1M events; custom EventBridge higher.

Example: 10 GB logs ingested, 5 custom metrics, 2 alarms = $6.70/month ($5 logs + $1.50 metrics + $0.20 alarms)—costs soar with verbose logging.

Use Cases and Scenarios

CloudWatch powers observability and automation:

- Performance Monitoring: EC2 CPU alarm notifies SNS at 90%—e.g., email ops for manual review.

- Auto-Scaling: Fargate tasks scale on

CPUUtilization > 70%—e.g., 3 to 10 containers dynamically. - Debugging: Query Lambda logs for

timeouterrors—e.g.,fields @timestamp | filter @message like /timeout/—pinpoint failures. - Scheduled Tasks: EventBridge triggers Lambda nightly—e.g., cleanup S3 temp files.

- Health Checks: Synthetics pings

/status—alerts on downtime.

Edge Cases and Gotchas

CloudWatch has quirks to master:

- Granularity Costs: 1-second metrics ($0.01/1,000) vs. free 1-minute—balance precision vs. budget.

- Log Explosion: Chatty apps (e.g., debug enabled) spike ingestion—filter at source (e.g., Lambda log level) or face $50+/month bills.

- No Auto-Delete: Logs persist unless expiration set—e.g., 30-day policy or S3 lifecycle—manual cleanup otherwise.

- Throttling: API limits (e.g., 1M

PutMetricData/month free)—batch writes or request quota increases. - Regional Scope: No native cross-region view—aggregate via custom Lambda or third-party tools.

Integration with Other Services

CloudWatch ties AWS together:

- EC2: Agent (

/opt/aws/amazon-cloudwatch-agent/) sends memory, disk—e.g.,MemoryUtilizationmissing from basic metrics. - Lambda: Auto-logs stdout—e.g.,

print("Error")hits/aws/lambda/myFunction—metrics likeDuration,Errors. - Fargate: Task metrics (CPU, memory)—e.g., scale ECS Service on

MemoryUtilization > 80%. - SNS: Alarm notifications—e.g., SMS on CPU spike; event targets—e.g., notify on S3 upload.

- S3: Export logs—e.g., 90-day retention then Glacier; metrics like

BucketSizeBytes. - X-Ray: Correlate traces—e.g., Lambda cold start latency with

Durationmetric.

Overview

AWS CloudTrail, launched in 2013, is an auditing and governance service that records API calls and account activity—e.g., who created an S3 bucket, when, and from where. It ensures compliance, security, and troubleshooting by logging every action across AWS services. From basics (trail setup) to advanced (multi-region trails, Insights), CloudTrail scales to millions of events/day with tamper-proof storage.

Architecture and Core Components

CloudTrail is a regional service—likely a log aggregator—delivering events to S3 and CloudWatch Logs. Key components:

- Trail: Config—e.g.,

my-trail—captures management, data, or Insights events. - Event: Record—e.g.,

{"eventName": "CreateBucket", "userIdentity": "alice"}—JSON log. - S3 Bucket: Sink—e.g.,

s3://my-trail-logs/—stores events, 11 9’s durability. - Insights: Anomaly—e.g., unusual API spikes—AI-driven detection.

Events flow: AWS API → CloudTrail → S3/Logs—~15m latency—99.9% SLA—tamper detection via digests.

Features and Configuration

Basics: Create—e.g., aws cloudtrail create-trail --name my-trail --s3-bucket-name my-trail-logs—Enable—e.g., aws cloudtrail start-logging—View—e.g., aws cloudtrail lookup-events. Intermediate: Multi-Region—e.g., --is-multi-region-trail—Org—e.g., aws cloudtrail create-trail --is-organization-trail—Data Events—e.g., aws cloudtrail put-event-selectors --data-resources S3—CloudWatch Logs—e.g., aws cloudtrail update-trail --cloud-watch-logs-role-arn .... Advanced: Insights—e.g., aws cloudtrail put-insight-selectors --insight-type ApiCallRateInsight—Encryption—e.g., KMS—Validation—e.g., aws cloudtrail validate-logs—Lake—e.g., aws cloudtrail create-event-data-store—Tags—e756.g., env=prod—Limits: 50 trails, 5 data resources—soft limits.

Pricing

Management Events: Free—1 trail/region—Additional—$2.00/100K events. Data Events: $0.10/100K—Insights—$0.35/100K analyzed—Lake—$0.028/GB ingested, $0.012/GB-month stored—e.g., 1M data events, 1M Insights, 10 GB Lake = $4.28/month. Free tier: 1 trail (management events)—forever. Example: 10M data events, 5M Insights, 100 GB Lake = $54.70/month ($10 + $1.75 + $42.95).

Monitoring and Scaling

Scales with API activity:

- Basic: Management—e.g., IAM changes—1M events/month.

- Intermediate: Data—e.g., S3 puts—10M events/month—Multi-Region—e.g., global audit.

- Advanced: Insights—e.g., 1M anomalies—Lake—e.g., 1 TB queried—100M events/month.

Example: Audit trail—my-trail (10M data events), Insights (spikes), Lake (long-term)—scales to 1B events/month.

Use Cases and Scenarios

Basic: Audit—e.g., who deleted EC2—Security—e.g., IAM changes. Intermediate: Compliance—e.g., PCI logs—Data—e.g., S3 access. Advanced: Insights—e.g., anomaly alerts—Lake—e.g., Athena queries.

Edge Cases and Gotchas

Latency: 15m—e.g., near-real-time—buffer apps—Data Events—e.g., 5 resources max—split trails. Cost: 1B data events—e.g., $1,000/month—limit selectors—Insights—e.g., noisy—tune thresholds. Lake: Query cost—e.g., 1 TB = $28—optimize—Retention—e.g., infinite—S3 lifecycle.

Integration with Other Services

S3: Storage—e.g., s3://logs/—Athena: Query—e.g., Lake tables—CloudWatch: Logs—e.g., real-time—Events—e.g., SNS trigger. Lambda: Process—e.g., parse events—Config: Rules—e.g., compliance check—IAM: Audit—e.g., policy changes.

Overview

AWS Config, launched in 2014, is a configuration management and compliance service that tracks resource changes—e.g., EC2 tags, S3 encryption—over time. It provides a historical view and rule-based evaluations for governance and auditing. From basics (resource tracking) to advanced (multi-account conformance, remediation), Config scales to thousands of resources with continuous monitoring.

Architecture and Core Components

Config is a regional service—likely a state store + event processor—recording snapshots and changes. Key components:

- Resource: Tracked—e.g.,

AWS::EC2::Instance—config history. - Rule: Policy—e.g.,

s3-bucket-public-read-prohibited—compliance check. - Snapshot: State—e.g., JSON of EC2 at T1—stored in S3.

- Aggregator: Multi-account—e.g., Org-wide view—centralized data.

Changes flow: Resource → Config → S3/CloudWatch—real-time via Streams—99.9% SLA—11 9’s durability with S3.

Features and Configuration

Basics: Enable—e.g., aws configservice start-configuration-recorder --recorder-name my-recorder—Track—e.g., aws configservice describe-configuration-recorders—Rule—e.g., aws configservice put-config-rule --config-rule-name my-rule. Intermediate: S3 Delivery—e.g., --delivery-channel S3—History—e.g., aws configservice get-resource-config-history --resource-id i-123—Remediation—e.g., aws configservice put-remediation-configurations --auto-remediate. Advanced: Multi-Account—e.g., aws configservice put-aggregator --aggregator-name my-agg—Conformance—e.g., aws configservice put-conformance-pack --template-s3-uri s3://my-template.yaml—Streams—e.g., aws configservice subscribe-to-resource-changes—Tags—e.g., env=prod—Limits: 100 rules, 50 aggregators—soft limits.

Pricing

Recording: $0.003/resource-month—Rules—$2.00/rule-month—Evaluations—$0.0001/eval—e.g., 100 resources, 10 rules, 1M evals = $32.30/month. Aggregator: Free—Conformance—$0.001/resource-eval—S3—$0.023/GB-month—e.g., 1K resources, 1 GB = $1.02/month. Free tier: None. Example: 1K resources, 50 rules, 10M evals, 1 TB conformance = $1,523/month ($3 + $100 + $1 + $419).

Monitoring and Scaling

Scales with resources:

- Basic: Track—e.g., 10 EC2—Rules—e.g., 5 checks—1K events/month.

- Intermediate: History—e.g., 100 resources—Remediation—e.g., SSM—10K events/month.

- Advanced: Aggregator—e.g., 10 accounts—Conformance—e.g., 1K resources—1M events/month.

Example: Compliance—my-config (1K resources), 50 rules, Org aggregator—scales to 10K resources.

Use Cases and Scenarios

Basic: Inventory—e.g., EC2 list—Compliance—e.g., encryption check. Intermediate: Change—e.g., tag drift—Remediation—e.g., fix S3 ACLs. Advanced: Multi-Account—e.g., Org audit—Conformance—e.g., CIS benchmarks.

Edge Cases and Gotchas

Recording: Delay—e.g., 10m—near-real-time—Unsupported—e.g., some global services—check docs. Rules: Cost—e.g., 1K rules = $2K/month—optimize—Eval—e.g., 1B = $100—limit scope. Conformance: Complexity—e.g., YAML errors—validate—Cost—e.g., 1M resources = $1K—sample audits.

Integration with Other Services

S3: Snapshots—e.g., s3://config/—CloudTrail: Events—e.g., API context—CloudWatch: Metrics—e.g., ConfigRulesCompliance. SSM: Remediation—e.g., AWS-FixS3Encryption—Lambda: Custom—e.g., rule logic—IAM: Audit—e.g., role changes.

Overview

Amazon EventBridge (formerly CloudWatch Events), relaunched in 2019, is a serverless event bus for routing events—e.g., EC2 state changes, custom app events—to targets like Lambda or SNS. It enables event-driven architectures with decoupled systems. From basics (scheduled rules) to advanced (Schema Registry, Archive), EventBridge scales to billions of events/month with low latency.

Architecture and Core Components

EventBridge is a regional, serverless service—likely a pub/sub system—ingesting events via APIs or integrations. Key components:

- Event: Payload—e.g.,

{"source": "aws.ec2", "detail-type": "EC2 Instance State-change"}—JSON. - Rule: Filter—e.g.,

{"source": ["aws.ec2"]}—matches events to targets. - Target: Destination—e.g., Lambda, SQS—processes events.

- Bus: Channel—e.g.,

defaultormy-bus—routes events, custom or partner.

Events flow: Source → Bus → Rule → Target—~100ms latency—99.9% SLA—reliable delivery with retries.

Features and Configuration

Basics: Rule—e.g., aws events put-rule --name my-rule --event-pattern '{"source": ["aws.s3"]}'—Target—e.g., aws events put-targets --rule my-rule --targets Id=1,Arn=arn:aws:lambda:...—List—e.g., aws events list-rules. Intermediate: Schedule—e.g., --schedule-expression "rate(5 minutes)"—Custom—e.g., aws events put-events --entries '{"Source": "my.app"}'—DLQ—e.g., SQS for retries. Advanced: Schema Registry—e.g., aws schemas create-schema --name my-schema—Archive—e.g., aws events create-archive --archive-name my-archive—Replay—e.g., aws events start-replay—Bus—e.g., aws events create-event-bus --name my-bus—Partner—e.g., SaaS events—Encryption—e.g., KMS—Limits: 100 rules/bus, 5 targets/rule—soft limits.

Pricing

Events: $1.00/1M—Custom/Partner—$1.00/1M—Schema—$0.39/1M lookups—Archive—$0.03/GB-month—e.g., 1M events, 1M lookups, 10 GB archive = $2.69/month. Free tier: 100K events—forever (state change only). Example: 10M custom events, 5M lookups, 100 GB archive = $24.95/month ($10 + $1.95 + $13).

Monitoring and Scaling

Scales with event volume:

- Basic: Schedule—e.g., 1K Lambda triggers—AWS—e.g., S3 events—1M/month.

- Intermediate: Custom—e.g., 10M app events—DLQ—e.g., failed retries—10M/month.

- Advanced: Archive—e.g., 1 TB stored—Replay—e.g., 100M reprocessed—Bus—e.g., 1B/month.

Example: Workflow—my-bus (10M custom events), Schema (typed), Archive (replay)—scales to 10B/month.

Use Cases and Scenarios

Basic: Automation—e.g., EC2 stop—Schedule—e.g., nightly job. Intermediate: App—e.g., order events—Retry—e.g., DLQ for failures. Advanced: Schema—e.g., typed events—Archive—e.g., audit replay—Partner—e.g., SaaS integration.

Edge Cases and Gotchas

Latency: 100ms—e.g., not real-time—buffer apps—Throttling—e.g., 10K puts/sec—batch put-events. Cost: 1B events—e.g., $1,000/month—filter wisely—Archive—e.g., 1 PB = $30K—lifecycle to S3. Schema: Overhead—e.g., lookup lag—cache locally—Replay—e.g., 90d limit—plan retention.

Integration with Other Services

Lambda: Target—e.g., process events—S3: Trigger—e.g., uploads—CloudWatch: Metrics—e.g., Invocations. SNS/SQS: Notify—e.g., fan-out—CloudTrail: Audit—e.g., API events—Config: Changes—e.g., resource updates—Step Functions: Orchestrate—e.g., workflows.

Storage Services

Scalable and durable storage solutions for objects, blocks, and file systems in AWS.

Overview

Amazon Simple Storage Service (S3) is an object storage service designed for virtually unlimited scalability, exceptional durability (99.999999999%, or 11 nines), and high availability (99.99% for Standard class). It’s a foundational AWS service, launched in 2006, built to store and retrieve any amount of data at any time, from anywhere on the web. Unlike block storage (e.g., EBS) or file systems (e.g., EFS), S3 uses a flat, key-value structure where data is stored as objects in buckets, identified by unique keys. This simplicity enables use cases ranging from backups and archives to static website hosting (like this page!), big data lakes, and content delivery.

Architecture and Core Components

S3’s architecture is distributed and serverless, abstracting physical infrastructure from users. Data is stored across multiple Availability Zones (AZs) within a region by default, ensuring resilience without user intervention. Here’s how it breaks down:

- Buckets: Top-level containers, analogous to folders but flat in structure. Each bucket has a globally unique name (e.g., "my-bucket-123") and is tied to a region (e.g., us-east-1). Buckets don’t nest; they’re a single namespace across all AWS accounts, hence the uniqueness requirement.

- Objects: The data itself—files, images, etc.—stored with a key (e.g., "photos/vacation.jpg"), metadata (e.g., content-type), and optional tags. Keys can mimic hierarchy with slashes (e.g., "folder/subfolder/file.txt"), but it’s a logical illusion; S3 treats it as one long string.

- Storage Backend: AWS doesn’t disclose specifics, but S3 replicates data across at least three AZs using a distributed system (likely a custom key-value store optimized for durability). Erasure coding and replication ensure data survives hardware failures.

Storage Classes

S3 offers multiple storage classes, each balancing cost, access speed, and durability. Understanding these is critical for cost optimization and performance tuning:

- S3 Standard: Default class for frequent access. 99.99% availability, millisecond latency, $0.023/GB/month (us-east-1). Use for active content like app data or websites.

- S3 Intelligent-Tiering: Auto-moves objects between frequent and infrequent tiers based on access patterns. Adds a small monitoring fee ($0.0025/1,000 objects) but saves manual effort. Ideal for unpredictable workloads.

- S3 Standard-IA (Infrequent Access): Lower cost ($0.0125/GB) with a 30-day minimum storage charge and retrieval fee ($0.01/GB). Suits backups accessed occasionally.

- S3 One Zone-IA: Cheaper ($0.01/GB) but stores in one AZ (99.5% availability), risking data loss if the AZ fails. Use for secondary copies or non-critical data.

- S3 Glacier: Archival storage ($0.004/GB) with retrieval times from minutes to hours. Perfect for compliance data; retrieval costs vary (e.g., $0.02/GB expedited).

- S3 Glacier Deep Archive: Lowest cost ($0.00099/GB), 12-hour retrieval default. For rarely accessed data like legal records; 180-day minimum charge applies.

Data transitions between classes via Lifecycle Policies—e.g., move logs to Glacier after 90 days, then Deep Archive after a year—automating cost savings.

Data Consistency and Access

S3 provides strong read-after-write consistency for PUTs of new objects (you upload, it’s immediately readable). However, updates or deletes (overwrites) are eventually consistent—there’s a brief window (seconds) where an old version might be returned due to replication lag across AZs. This impacts designs needing instant consistency (e.g., avoid S3 for a database’s primary store). Access is via:

- HTTP/HTTPS: RESTful API (GET, PUT, DELETE) or SDKs. URLs like

s3.amazonaws.com/my-bucket/keyor regional endpoints (e.g.,my-bucket.s3.us-east-1.amazonaws.com). - Pre-signed URLs: Temporary access links (e.g., 5-minute expiration) for private objects—great for secure file sharing.

- CLI/UI: AWS CLI (

aws s3 cp) or Console for manual operations.

Security and Access Control

S3 is private by default—new buckets and objects require explicit permissions. Security layers include:

- IAM Policies: User/service-level access (e.g., allow EC2 to read

my-bucket/*). Example:{"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::my-bucket/*"}. - Bucket Policies: Bucket-wide rules (e.g., public read:

{"Effect": "Allow", "Principal": "*", "Action": "s3:GetObject"}). Can enforce MFA or IP restrictions. - ACLs: Legacy, less granular—object/bucket ownership (e.g., grant write to another account).

- Encryption: Server-side (SSE-S3 AES-256, SSE-KMS for key management, SSE-C for custom keys) or client-side. Mandatory for compliance in regulated industries.

- Block Public Access: Account/bucket-level toggle to prevent accidental exposure—SAA-C03 emphasizes this.

Example: Hosting this site requires a public bucket policy, but sensitive data might use KMS with IAM roles for Lambda access.

Features and Capabilities

S3’s versatility comes from advanced features:

- Versioning: Tracks object changes—e.g., overwrite

file.txt, and prior versions remain accessible via version IDs. Enables recovery from accidental deletes (usex-amz-version-idin GET). - Lifecycle Policies: Automate transitions (e.g., Standard → Glacier after 90 days) or expiration (delete after 365 days). Saves costs on aging data.

- Replication: Cross-Region (CRR) or Same-Region (SRR)—e.g., replicate

us-east-1tous-west-2for disaster recovery. Requires versioning; rules filter by prefix/tags. - Events: Trigger Lambda, SNS, or SQS on actions (e.g.,

s3:ObjectCreated:*)—e.g., resize images on upload. - Transfer Acceleration: Uses CloudFront’s edge locations for faster uploads over long distances—enable via bucket settings.

- Multipart Upload: Splits large files (e.g., 10 GB) into chunks for parallel upload—resumes on failure. API-driven (

Initiate,UploadPart,Complete). - Static Website Hosting: Serve HTML/CSS/JS (like this page) with custom domains via CloudFront. Set

index.htmlas the index document.

Pricing Model

S3’s pay-as-you-go pricing includes:

- Storage: $0.023/GB (Standard), down to $0.00099/GB (Deep Archive). Free tier: 5 GB/month.

- Requests: $0.005/1,000 GETs, $0.0004/1,000 PUTs—costly for high-frequency operations.

- Data Transfer: Free in (upload), $0.09/GB out to internet (after 100 GB free tier). Region-to-region varies (e.g., $0.02/GB us-east-1 to us-west-2).

- Extras: Retrieval fees (e.g., $0.01/GB Standard-IA), Intelligent-Tiering monitoring ($0.0025/1,000 objects).

Example: Hosting 10 GB on Standard costs $0.23/month, but 1M GETs adds $5—optimize with CloudFront caching.

Use Cases and Scenarios

S3’s flexibility shines in real-world applications:

- Static Websites: Host this site—set bucket public, enable hosting, point to

index.html. Add CloudFront for HTTPS and speed. - Data Lakes: Store petabytes of raw data (e.g., logs, IoT streams) with Athena for SQL queries—use prefixes (e.g.,

year=2025/month=03/) for partitioning. - Backup/DR: Replicate critical files across regions with CRR—e.g., nightly snapshots from EC2 to S3, then to

us-west-2. - Content Delivery: Pair with CloudFront—e.g., serve 4K videos with low latency, S3 as origin.

Edge Cases and Gotchas

Deep understanding requires knowing S3’s quirks:

- Eventual Consistency: Overwrites might show old data briefly—use unique keys (e.g., timestamps) for critical updates.

- Request Rate: S3 auto-scales but throttles at ~3,500 PUTs/sec or 5,500 GETs/sec per prefix—spread keys (e.g., hash prefixes) for high throughput.

- Versioning Overhead: Enabled buckets accumulate versions—delete old ones manually or via lifecycle to control costs.

- Cross-Region Latency: CRR isn’t instant (minutes)—not real-time DR.

Integration with Other Services

S3 integrates tightly with AWS:

- Lambda: Process uploads (e.g., thumbnail generation)—S3 event triggers Lambda.

- CloudFront: Cache S3 objects at edge locations—reduces GET costs and latency.

- Athena: Query CSV/JSON in S3 without a database—e.g., analyze logs in

s3://logs/. - Snowball: Physically transfer terabytes to S3—beats slow uploads for migrations.

Overview

Amazon Elastic Block Store (EBS) provides persistent block storage for EC2 instances, acting as virtual hard drives with low-latency access since its launch in 2008. Unlike S3’s object storage or EFS’s file system approach, EBS delivers raw block-level storage—think of it as a SAN (Storage Area Network) in the cloud, optimized for databases, boot volumes, and transactional workloads. It offers durability (99.999% single-region) and flexibility—resize, snapshot, or detach volumes without downtime—making it a cornerstone for compute-intensive applications needing consistent IOPS.

Architecture and Core Components

EBS volumes reside in a single Availability Zone (AZ), replicated within that AZ’s storage fabric for durability—not across AZs (use snapshots for multi-AZ DR). Data is stored in blocks (e.g., 4 KB chunks), attached to EC2 instances over a high-speed network (not local disk), leveraging AWS’s Nitro System for performance. Key components:

- Volumes: Block devices (e.g., 1 GB to 16 TB) attached to one EC2 instance (or multiple with Multi-Attach)—e.g.,

/dev/xvdaas root. - Snapshots: Incremental backups stored in S3—e.g., snapshot a 100 GB volume, only changed blocks since last snapshot are saved.

- Storage Backend: AWS uses SSDs or HDDs (type-dependent), replicated within AZ—erasure coding ensures data survives hardware faults.

Volumes are network-attached via ENIs, with latency in milliseconds—faster than S3, slower than Instance Store.

Volume Types and Performance

EBS offers SSD and HDD volume types, each tuned for specific workloads—balancing IOPS (I/O operations per second), throughput (MB/s), and cost:

- gp3 (General Purpose SSD): 3,000 IOPS base (up to 16,000), 125 MB/s base (up to 1,000), $0.08/GB—default for most apps, cost-effective.

- gp2 (Legacy SSD): 3 IOPS/GB (3,000-16,000), 250 MB/s max, $0.10/GB—older, less flexible than gp3.

- io2 (Provisioned IOPS SSD): Up to 256,000 IOPS, 4,000 MB/s, 99.999% durability, $0.125/GB—high-performance DBs (e.g., Oracle).

- io1 (Legacy PIOPS): Up to 64,000 IOPS, 1,000 MB/s, $0.125/GB—older io2 alternative.

- st1 (Throughput Optimized HDD): 500 MB/s max, 40-500 IOPS, $0.045/GB—big data, logs.

- sc1 (Cold HDD): 250 MB/s max, 12-250 IOPS, $0.015/GB—infrequent access, archives.

Performance scales with size (except io2)—e.g., 1 TB gp3 = 3,000 IOPS base, burst to 16,000. Multi-Attach (io2 only) allows clustering—e.g., shared volume for HA DBs.

Data Management and Access

EBS volumes attach to EC2 via block device mappings—e.g., /dev/sdb—formatted with filesystems (ext4, NTFS). Access is:

- Direct: EC2 mounts volumes—e.g.,

mount /dev/xvdf /data—low-latency reads/writes. - Snapshots: Point-in-time copies in S3—restore to new volumes or share across regions/accounts.

- Encryption: AES-256 via KMS—enabled per volume or snapshot, seamless to EC2.

Snapshots are incremental—first full, then deltas—e.g., 100 GB volume, 10 GB changed = 10 GB stored. Restore lazy-loads data—initial reads slower until fetched from S3.

Security and Access Control

EBS is private to your VPC—security is layered:

- IAM: Controls volume/snapshot actions—e.g.,

{"Action": "ec2:CreateVolume", "Resource": "*"}. - Encryption: KMS keys (default or custom)—e.g.,

aws/ebskey auto-applied, or rotate custom keys. - Snapshot Sharing: Share encrypted snapshots—recipient needs KMS key access.

- Resource Policies: Restrict snapshot access—e.g., specific accounts only.

Example: Encrypt a DB volume with KMS, share snapshot with DR account—secure and compliant.

Features and Capabilities

EBS enhances block storage with advanced features:

- Resize: Increase size/IOPS on-the-fly—e.g., 100 GB gp3 to 200 GB, extend filesystem live.

- Snapshots: Backup/restore—e.g., nightly cron job snapshots to S3, cross-region copy for DR.

- Multi-Attach: io2 volumes shared across instances—e.g., clustered PostgreSQL in one AZ.

- Fast Snapshot Restore (FSR): Pre-warms snapshots—e.g., instant restore for 10 volumes, $0.75/hr per FSR.

- Elastic Volumes: Change type—e.g., gp2 to gp3—minimal downtime.

Pricing Model

EBS pricing varies by type:

- Storage: $0.08-$0.125/GB (SSD), $0.015-$0.045/GB (HDD)—e.g., 100 GB gp3 = $8/month.

- IOPS: io2/io1 $0.065/PIOPS-month—e.g., 10,000 IOPS = $650/month.

- Snapshots: $0.05/GB-month—incremental, e.g., 10 GB changed = $0.50/month.

- FSR: $0.75/hr per snapshot—e.g., 2 FSRs = $36/day.

No free tier—costs tied to EC2 usage. Example: 200 GB gp3 (3,000 IOPS) + 20 GB snapshot = $17/month.

Use Cases and Scenarios

EBS powers persistent workloads:

- Boot Volumes: EC2 root (8 GB gp3)—e.g., Amazon Linux AMI.

- Databases: io2 for MySQL (10,000 IOPS)—e.g., transactional e-commerce DB.

- DR: Snapshots to S3, restore in another region—e.g., nightly backup of 1 TB volume.

- Big Data: st1 for Hadoop—e.g., 5 TB logs with 500 MB/s throughput.

Edge Cases and Gotchas

Single AZ: AZ failure loses volume—snapshot to S3 for DR. Performance: gp3 burst limits—e.g., 16,000 IOPS max, io2 for sustained needs. Snapshot Restore: Lazy-loading slows first access—use FSR for speed. Multi-Attach: Same AZ only—cross-AZ needs app-level sync.

Integration with Other Services

EC2: Primary storage—e.g., root + data volumes. S3: Snapshots stored—e.g., copy to us-west-2. CloudWatch: Metrics (e.g., VolumeReadOps)—alarm on IOPS. Data Lifecycle Manager (DLM): Automate snapshots—e.g., daily at 2 AM.

Overview

Amazon Elastic File System (EFS), launched in 2016, is a fully managed, scalable file storage service designed for shared access across multiple EC2 instances, Lambda functions, or on-premises servers. Unlike EBS’s block storage or S3’s object storage, EFS provides a POSIX-compliant file system (NFSv4), perfect for applications needing a traditional directory structure—think shared configs, content management, or big data workloads. It scales automatically (petabytes), offers high availability (multi-AZ), and simplifies management—no provisioning or capacity planning required.

Architecture and Core Components

EFS is a regional service, storing data across multiple AZs within a region for durability (11 nines) and availability (99.99%). It uses a distributed file system (likely NFS-based) with a control plane managing metadata and a data plane handling file I/O. Key components:

- File Systems: The top-level resource—e.g.,

fs-12345678—tied to a VPC, with mount targets in subnets. - Mount Targets: ENI-based endpoints per AZ—e.g.,

fs-12345678.efs.us-east-1.amazonaws.com—clients connect via NFS. - Data Storage: Elastic—grows/shrinks with usage, no fixed size—e.g., 1 GB to 10 TB seamlessly.

Data replicates across AZs—writes sync immediately (strong consistency), reads are low-latency via regional caching. Access is network-based, requiring VPC connectivity.

Performance Modes and Storage Classes

EFS offers performance tailored to latency and throughput:

- General Purpose: Low-latency (ms), up to 35,000 IOPS—default for web servers, CMS, or dev environments. Use CloudWatch (

BurstCreditBalance) to monitor. - Max I/O: Higher throughput (GB/s), unlimited IOPS—e.g., big data analytics, media processing—sacrifices some latency for scale.

Storage classes optimize cost:

- Standard: Frequent access, $0.30/GB-month—e.g., active files.

- Infrequent Access (IA): $0.025/GB-month, $0.01/GB retrieval—e.g., old logs. Lifecycle policies move files after 30 days.

- One Zone: Single AZ (99.9% availability), $0.16/GB Standard, $0.0133/GB IA—cheaper, less resilient.

Baseline throughput scales with size—e.g., 100 MB/s per TB (burst to 500 MB/s)—Max I/O removes limits.

Data Management and Access

EFS mounts as a filesystem via NFSv4.1—e.g., mount -t nfs4 fs-12345678.efs.us-east-1.amazonaws.com:/ /mnt/efs. Access is:

- EC2: Mount across AZs—e.g., 10 instances share

/data—concurrent reads/writes. - Lambda: Access via VPC—e.g., process files in

/mnt/efs/input. - On-Prem: VPN/Direct Connect—e.g., mount to local servers.

- Backups: AWS Backup—e.g., daily snapshots with 35-day retention.

Strong consistency—writes visible instantly across mounts. Metadata (e.g., permissions) managed via POSIX—e.g., chmod 755.

Security and Access Control

EFS secures data in transit and at rest:

- IAM: Controls API actions—e.g.,

{"Action": "elasticfilesystem:CreateFileSystem"}—plus mount permissions via VPC. - Encryption: AES-256—KMS at rest (default), TLS in transit (enforced).

- Security Groups: Mount target firewall—e.g., allow NFS port 2049 from EC2 subnet.

- POSIX Permissions: File-level access—e.g.,

user1:rw,group2:r.

Example: Encrypt EFS for a shared CMS—EC2 mounts via TLS, IAM restricts creation.

Features and Capabilities

EFS enhances file storage:

- Elastic Scaling: No provisioning—e.g., 1 GB to 1 PB without downtime.

- Lifecycle Management: Move to IA—e.g.,

30-day policysaves 90% on cold data. - Backup: AWS Backup—e.g., incremental daily snapshots to S3.

- Access Points: Restrict mounts—e.g.,

/appsfor app A,/datafor app B—enforce paths/permissions. - Burst Credits: General Purpose bursts to 500 MB/s—credits accrue when idle.

Pricing Model

EFS pricing is usage-based:

- Storage: $0.30/GB-month (Standard), $0.025/GB-month (IA)—One Zone $0.16/$0.0133.

- Requests: Included—e.g., reads/writes free beyond throughput.

- Throughput: Burst free; Provisioned Throughput $6/MB/s-month—e.g., 10 MB/s = $60/month.

- Backup: $0.05/GB-month via AWS Backup.

Example: 100 GB Standard, 10 GB IA = $30.25/month—add $60 for 10 MB/s provisioned. Free tier: 5 GB/month Standard.

Use Cases and Scenarios

EFS excels in shared storage:

- CMS: WordPress on EC2—e.g.,

/wp-contentshared across 5 instances. - Big Data: Spark on Max I/O—e.g., 10 TB datasets, 1 GB/s throughput.

- Dev Environments: Code repos—e.g.,

/gitmounted by 20 devs. - Serverless: Lambda processes

/efs/input—e.g., batch file jobs.

Edge Cases and Gotchas

Burst Limits: General Purpose credits deplete—e.g., 1 TB = 100 MB/s base, burst to 500 MB/s—switch to Max I/O for heavy loads. Latency: Milliseconds—not block-level (EBS)—avoid latency-sensitive DBs. One Zone: AZ failure loses data—use multi-AZ for critical apps. Cost: Expensive vs. S3—e.g., 1 TB = $300/month vs. $23.

Integration with Other Services

EC2: Multi-mount—e.g., /data across AZs. Lambda: File processing—e.g., read /efs/logs. Fargate: Persistent storage—e.g., ECS tasks share /configs. CloudWatch: Metrics (e.g., DataReadBytes)—alarm on credit depletion. AWS Backup: Snapshots—e.g., nightly to S3.

Overview

Amazon FSx for Lustre, introduced in 2018, is a fully managed, high-performance file storage service built on the open-source Lustre filesystem, optimized for fast, parallel access to large datasets. Unlike FSx for Windows (SMB-based) or EFS (general-purpose NFS), FSx for Lustre targets high-performance computing (HPC), machine learning (ML), and big data workloads needing massive throughput (100s of GB/s) and low latency (sub-millisecond). It integrates tightly with S3, enabling seamless data movement—e.g., process petabytes from S3, write results back—making it a powerhouse for compute-intensive, temporary storage needs.

Architecture and Core Components

FSx for Lustre runs in a single region, with data stored in one AZ (Persistent) or ephemeral (Scratch) configurations. It leverages Lustre’s distributed architecture—splitting metadata (MDS) and data (OSTs) across servers for parallelism. Key components:

- File Systems: The Lustre instance—e.g.,

fs-abcdef12—with a capacity (1.2 TB-100s of TB). - Mount Targets: VPC endpoints—e.g.,

fs-abcdef12.fsx.us-east-1.amazonaws.com—clients mount via Lustre protocol. - Storage Backend: SSD-based, optimized for IOPS and throughput—replicated within AZ (Persistent) or not (Scratch).

Data syncs with S3 optionally—e.g., import on creation, export on demand. Access is VPC-only, via ENIs.

Performance and Storage Options

FSx for Lustre offers two deployment types:

- Scratch: Max performance (200 MB/s/TB base, burst to GB/s), no replication—e.g., ML training, temporary data. Data lost on failure.

- Persistent: Durable (11 nines), 50-200 MB/s/TB base—e.g., long-running HPC. HA option with standby in another AZ (failover in minutes).

Throughput scales with size—e.g., 6 TB = 1.2 GB/s base (Scratch)—IOPS up to 100,000s. Lustre stripes data across OSTs—e.g., 1 MB stripe size for large files.

Data Management and Access

Mount via Lustre client—e.g., mount -t lustre fs-abcdef12@tcp:/fsx /mnt/lustre on EC2. Access is:

- EC2: Parallel mounts—e.g., 100 instances read

/mnt/lustre/dataat GB/s. - S3 Integration: Link to bucket—e.g.,

aws fsx update-data-repository-association—import/export files. - Backups: Persistent only—daily, 0-35 days retention—e.g., restore to new FS.

POSIX-compliant—e.g., ls -l works—strong consistency across clients.

Security and Access Control

FSx for Lustre secures via:

- IAM: API control—e.g.,

{"Action": "fsx:CreateFileSystem"}. - Encryption: KMS at rest (default), in transit—e.g., Lustre client encrypts.

- Security Groups: VPC firewall—e.g., allow Lustre ports (988, 1018-1023).

- POSIX Permissions: File-level—e.g.,

chmod 644—no AD integration.

Example: Encrypt ML dataset—EC2 mounts via VPC, IAM restricts access.

Features and Capabilities

S3 Sync: Bidirectional—e.g., datarepo link imports S3 bucket, exports results. HA: Persistent multi-AZ—e.g., failover in 10s of seconds. Backups: Persistent only—e.g., PITR from yesterday. Striping: Customizable—e.g., 4 OSTs for 4 GB/s reads.

Pricing Model

Storage: $0.14/GB-month (Persistent), $0.0133/GB-month (Scratch)—e.g., 6 TB Persistent = $840/month. Throughput: Included—e.g., 1.2 GB/s free at 6 TB. Backups: $0.05/GB-month—e.g., 1 TB = $50/month. S3 Requests: Standard S3 rates—e.g., $0.005/1,000 GETs.

Use Cases and Scenarios

ML Training: 10 TB dataset—e.g., 100 EC2 GPUs read at 2 GB/s, export to S3. HPC: Simulations—e.g., 1 PB Scratch for weather modeling. Media Processing: 4K rendering—e.g., 50 TB Persistent, HA.

Edge Cases and Gotchas

Scratch Risk: No durability—save to S3 often. Cost: High for persistence—e.g., 10 TB = $1,400/month vs. S3 $230. Single AZ (Scratch): Failure loses data—use Persistent for critical. S3 Sync Latency: Minutes, not real-time—plan workflows.

Integration with Other Services

EC2: HPC clusters—e.g., /mnt/lustre. S3: Data lake—e.g., import s3://data, export results. CloudWatch: Metrics (e.g., FreeDataStorageCapacity)—alarm on space. Fargate/EKS: Mount for containerized ML—e.g., /lustre/input.

Overview

Amazon FSx for Windows File Server, launched in 2018, is a fully managed Windows-based file storage service, delivering SMB (Server Message Block) file shares for Windows-centric workloads. Unlike EFS’s POSIX focus or S3’s object model, FSx supports NTFS, Active Directory (AD) integration, and Windows permissions—ideal for enterprise apps like SQL Server, IIS, or file shares needing Windows compatibility. It offers HA (multi-AZ), backups, and encryption, abstracting the complexity of managing Windows file servers.

Architecture and Core Components

FSx runs on AWS’s infrastructure, emulating a Windows Server with SMB (2.0-3.1.1). Data is stored in a single region, with options for single-AZ or multi-AZ deployments:

- File Systems: The storage unit—e.g.,

fs-98765432—with a capacity (8 GB-100 TB) and throughput. - File Shares: SMB endpoints—e.g.,

\\fs-98765432.file.fsx.us-east-1.amazonaws.com\share—mounted by clients. - Storage Backend: SSD or HDD, replicated within/between AZs—e.g., multi-AZ syncs primary to standby.

Data is durable (11 nines)—multi-AZ uses synchronous replication; single-AZ relies on AZ-internal redundancy. Access requires VPC and AD (AWS Managed AD or on-prem).

Performance and Storage Options

FSx performance scales with size and type:

- SSD: Low-latency, 12-2,048 MB/s, $0.13/GB-month—e.g., app data, DBs.

- HDD: Higher capacity, 12-80 MB/s, $0.013/GB-month—e.g., backups, archives.

Throughput: 8 MB/s base per TB (SSD), burst to 2,048 MB/s—provisioned option (e.g., 512 MB/s) for high demand. IOPS scale automatically—e.g., 3 IOPS/GB for SSD.

Data Management and Access

FSx mounts via SMB—e.g., net use Z: \\fs-98765432\share on Windows. Access is:

- EC2: Windows instances mount shares—e.g.,

Z:\datafor IIS. - On-Prem: VPN/Direct Connect—e.g., AD-joined servers access.

- Backups: Daily automatic—e.g., 7-day retention, PITR (point-in-time recovery).

- Data Deduplication: Reduces redundancy—e.g., save 30% on repetitive files.

NTFS permissions—e.g., Administrators:Full, Users:Read—managed via AD. Strong consistency across mounts.

Security and Access Control

FSx integrates with Windows security:

- AD: Required—AWS Managed AD or on-prem—e.g.,

corp.example.comusers/groups. - Encryption: KMS at rest, SMB encryption in transit—e.g., SMB 3.0+ enforces.

- IAM: API access—e.g.,

{"Action": "fsx:CreateFileSystem"}. - Security Groups: VPC firewall—e.g., allow SMB ports 445, 135-139.

- ACLs: NTFS-level—e.g.,

user1:rw, inherited from parent.

Example: AD-joined EC2 mounts encrypted share—only Domain Users access.

Features and Capabilities

FSx enhances Windows storage:

- Multi-AZ: HA—e.g., failover in 60s, 99.99% availability.

- Backups: Automated or manual—e.g., 35-day retention, restore to new FS.

- Deduplication: Enabled per share—e.g., compress repetitive docs.

- Shadow Copies: Previous versions—e.g., recover deleted files from 2 PM snapshot.

- Quota Management: Per-user limits—e.g., 10 GB/user.

Pricing Model

FSx pricing includes:

- Storage: $0.13/GB-month (SSD), $0.013/GB-month (HDD)—e.g., 1 TB SSD = $130/month.

- Throughput: $2.20/MB/s-month provisioned—e.g., 512 MB/s = $1,126/month.

- Backups: $0.05/GB-month—e.g., 100 GB = $5/month.

- Requests: Free—e.g., SMB reads/writes included.

Example: 1 TB SSD, 64 MB/s, 50 GB backup = $162.50/month ($130 + $30 + $2.50)—no free tier.

Use Cases and Scenarios

FSx powers Windows workloads:

- File Shares: AD-integrated storage—e.g.,

\\fsx\deptfor 100 users. - SQL Server: Persistent storage—e.g., 2 TB SSD for DB files.

- IIS: Web content—e.g.,

Z:\wwwrootacross 5 instances. - DR: Multi-AZ + backups—e.g., failover + restore in us-east-1b.

Edge Cases and Gotchas

AD Dependency: No AD, no access—setup required. Cost: High vs. EFS—e.g., 1 TB SSD = $130 vs. $300 for EFS. Multi-AZ Failover: 60s delay—plan app tolerance. Throughput: Base scales slowly—provision for peaks.

Integration with Other Services

EC2: Windows mounts—e.g., Z:\data. AWS Managed AD: Authentication—e.g., corp.example.com. CloudWatch: Metrics (e.g., DataReadBytes)—alarm on usage. Backup: Snapshots—e.g., daily to S3. VPC: Private access—e.g., no IGW needed.

Networking Services

AWS networking solutions for connectivity, traffic management, and global content delivery.

Overview

Amazon Virtual Private Cloud (VPC), launched in 2009, is AWS’s core networking service, providing a logically isolated virtual network within the AWS cloud. It’s the foundation for most AWS services—EC2, RDS, Lambda—letting you define IP ranges, subnets, routing, and connectivity. Think of it as your private data center: control access, segment resources, and connect to on-premises or other clouds. From basics (public/private subnets) to advanced (VPC Peering, Transit Gateway), it’s flexible for simple apps or complex enterprises.

Architecture and Core Components

VPC is a regional construct, spanning AZs within a region (e.g., us-east-1). It’s built on AWS’s global network, isolating your resources via virtualization. Key components:

- VPC: The network—e.g.,

10.0.0.0/16(65,536 IPs)—regional scope. - Subnets: AZ-specific segments—e.g.,

10.0.1.0/24(256 IPs)—public (Internet access) or private (isolated). - Route Tables: Traffic rules—e.g.,

0.0.0.0/0to Internet Gateway (IGW)—one per subnet. - Internet Gateway (IGW): Public access—e.g., connects VPC to internet.

- NAT Gateway: Private subnet outbound—e.g.,

nat-123in public subnet, $0.045/hr. - Network ACLs (NACLs): Stateless firewall—e.g., allow port 80 inbound—subnet-level.

- Security Groups: Stateful firewall—e.g., allow SSH from 10.0.0.5—instance-level.

Data flows via AWS’s private backbone—e.g., EC2 in 10.0.1.0/24 to RDS in 10.0.2.0/24—no public internet unless routed via IGW/NAT. Default VPC per region—e.g., 172.31.0.0/16—preconfigured for quick starts.

Features and Configuration

CIDR: Primary—e.g., 10.0.0.0/16—secondary added—e.g., 192.168.0.0/16. Subnets: /28 (16 IPs) to /16—e.g., 10.0.1.0/24 per AZ. Routing: Custom tables—e.g., 10.1.0.0/16 to VPC Peering. Gateways: IGW (free), NAT (HA in AZ)—e.g., $32/month. VPC Peering: Connect VPCs—e.g., us-east-1 to us-west-2, no transitive routing. Transit Gateway: Hub-and-spoke—e.g., 10 VPCs + on-prem, $0.02/GB. Endpoints: Private AWS access—e.g., vpce-s3, $0.01/hr. Limits: 5 VPCs, 200 subnets—soft limits.

Pricing

VPC: Free—core networking costs nothing. NAT Gateway: $0.045/hr + $0.045/GB—e.g., 10 GB/day = $32.40/month. VPC Peering: $0.01/GB (inter-region)—e.g., 100 GB = $1. Transit Gateway: $0.02/GB + $0.05/attachment-hr—e.g., 5 VPCs, 50 GB = $75/month. Endpoints: $0.01/hr + $0.01/GB—e.g., S3 access = $7.30/month. Free tier: None—NAT/Transit adds up.

Networking and Scaling

VPC scales with AWS—millions of IPs. Basics to advanced:

- Basic: Public subnet—e.g., EC2 + IGW,